Stop bringing old practices to the cloud

When organizations migrate to the public cloud, they often mistakenly treat it as “somebody else’s data center” or “a suitable place to run a disaster recovery site”, and as a result they bring their old practices along with them.

In this blog post, I will review some of the common old (and perhaps bad) practices that organizations still use in the cloud today.

Mistake #1 — The cloud is cheaper

I often hear IT veterans describe the public cloud as a cheaper alternative to the on-prem data center, thanks to its flexible pricing plans and low storage costs.

In some cases this may be true, but judging specific use cases purely on cost is too narrow and misses the real benefits of the public cloud: agility, scale, automation, and managed services.

Don’t get me wrong: cost is an important factor, but it is time to look at it from an efficiency point of view and make efficiency part of every architecture decision.

Begin by looking at the business requirements and asking yourself, among other questions:

- What am I trying to achieve, and what capabilities do I need? Only then figure out which services will allow you to meet those needs.

- Do you need persistent storage? Great. What are your data access patterns? Do you need the data to be available in real time, or can you store data in an archive tier?

- Your system needs to respond to customers’ requests. Does your application need to fetch fresh data for every API call, or is it OK to serve answers from a caching service while the calls go through an asynchronous queuing service to fetch the data?
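Here is a minimal Python sketch of the cache-plus-queue option. It assumes a plain dictionary stands in for a managed caching service and `queue.Queue` stands in for an asynchronous queuing service. `ProductApi`, `get_price`, and `refresh_worker` are hypothetical names and are not part of any cloud SDK. The request path never waits on the backend: it returns whatever the cache holds and enqueues a refresh when the entry is missing or stale.

```python
import queue
import threading
import time


class ProductApi:
    """Hypothetical API that answers from a cache and refreshes data asynchronously."""

    def __init__(self, fetch_from_backend, ttl_seconds=60.0):
        self._fetch = fetch_from_backend   # slow call to the system of record
        self._ttl = ttl_seconds
        self._cache = {}                   # stand-in for a managed cache: key -> (value, stored_at)
        self._requests = queue.Queue()     # stand-in for an asynchronous queuing service
        self._lock = threading.Lock()

    def get_price(self, product_id):
        """Answer from the cache right away; enqueue a refresh if the entry is missing or stale."""
        with self._lock:
            entry = self._cache.get(product_id)
        if entry is None or time.monotonic() - entry[1] > self._ttl:
            self._requests.put(product_id)  # the backend fetch happens off the request path
        return entry[0] if entry else None  # None means "not available yet, retry later"

    def refresh_worker(self, max_items=None):
        """Consume queued refresh requests and populate the cache (runs as a separate worker)."""
        processed = 0
        while max_items is None or processed < max_items:
            product_id = self._requests.get()
            value = self._fetch(product_id)
            with self._lock:
                self._cache[product_id] = (value, time.monotonic())
            self._requests.task_done()
            processed += 1


api = ProductApi(lambda pid: {"id": pid, "price": 9.99})
print(api.get_price("sku-1"))  # None: the first call only enqueues a fetch
api.refresh_worker(max_items=1)
print(api.get_price("sku-1"))  # {'id': 'sku-1', 'price': 9.99}
```

The trade-off is that the first request for a key, or a request after the TTL expires, gets no answer or a stale one. Whether that is acceptable is exactly the business question this list asks you to settle.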

Mistake #2 — Using legacy architecture components

Many organizations still use legacy practices in the public cloud: moving VMs to the cloud in a “lift & shift” pattern, using SMB/CIFS file services (such as Amazon FSx for Windows File Server or Azure Files), deploying databases on VMs and maintaining them manually, and so on.

For static and stable legacy applications, the old practices will work, but for how long?

Begin by asking yourself:

- How will your application handle unpredictable loads? Autoscaling is great, but can your application scale down when resources are not needed?

- What value are you getting from maintaining backend services such as storage and databases yourself?

- What value are you getting from continuing to use commercially licensed database engines? Perhaps it is time to consider open-source or community-based engines (such as Amazon RDS for PostgreSQL, Azure Database for MySQL, or OpenSearch), which offer wider community support and may reduce the effort of migrating to another cloud provider in the future.
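On the scale-down question: a scaling policy should shrink capacity as readily as it grows it. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * metric / target)`, with a small tolerance band to avoid flapping. The function name, the default bounds, and the tolerance value are assumptions chosen for illustration.

```python
import math


def desired_capacity(current, metric, target, min_nodes=2, max_nodes=20, tolerance=0.1):
    """Proportional scaling that can shrink as well as grow, within fixed bounds."""
    if current <= 0 or target <= 0:
        raise ValueError("current and target must be positive")
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: do nothing, avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_nodes, min(max_nodes, desired))


print(desired_capacity(4, metric=90, target=50))  # 8: scale out under load
print(desired_capacity(8, metric=10, target=50))  # 2: scale in when idle
print(desired_capacity(4, metric=52, target=50))  # 4: within tolerance
```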

Mistake #3 — Using traditional development processes

In the old data center, we used to develop monolithic applications: a stack of components (VMs, databases, and storage) glued together, which made it challenging to release new versions or features, upgrade, scale, and so on.

As organizations began embracing the public cloud, the shift to a DevOps culture allowed them to develop and deploy new capabilities much faster, using smaller teams that each own a specific component. Each team can release new versions of its component independently and take advantage of autoscaling to respond to real-time load, regardless of the other components in the architecture.

Instead of hard-coded, manually maintained configuration files that are prone to human error, it is time to move to modern CI/CD processes. It is time to automate everything that does not require a human decision, handle everything as code (Infrastructure as Code, Policy as Code, and the application’s own code), store everything in a central code repository (and later in a central artifact repository), and be able to control authorization, auditing, roll-back (in case of bugs in the code), and fast deployments.

CI/CD processes allow us to minimize differences between SDLC stages. The same code (from the code repository) deploys the Dev, Test, and Prod environments, and environment variables switch the target environment, the backend service connection settings, and the credentials (keys, secrets, etc.). Meanwhile, every stage uses the same testing capabilities (such as static code analysis and vulnerable package detection).
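Here is a minimal sketch of “same code, different environment.” The Python below builds its settings only from environment variables that the pipeline injects for each stage. The variable names (`DEPLOY_ENV`, `DB_HOST`, `API_BASE_URL`, `DEBUG`) and the `example.internal` URLs are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

STAGES = ("dev", "test", "prod")


@dataclass(frozen=True)
class Settings:
    stage: str
    db_host: str
    api_base_url: str
    debug: bool


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Same code for every stage; only the injected environment variables differ."""
    env = os.environ if env is None else env
    stage = env.get("DEPLOY_ENV", "dev")
    if stage not in STAGES:
        raise ValueError(f"unknown DEPLOY_ENV: {stage!r}")
    return Settings(
        stage=stage,
        db_host=env["DB_HOST"],  # set per stage by the pipeline; fail fast if missing
        api_base_url=env.get("API_BASE_URL", f"https://api.{stage}.example.internal"),
        # Debug output can never be switched on in prod, whatever DEBUG says.
        debug=stage != "prod" and env.get("DEBUG", "false").lower() == "true",
    )
```

The pipeline promotes the same artifact from Dev to Prod and changes only the variables it injects. This is what keeps the stages comparable.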

Mistake #4 — Static vs. Dynamic Mindset

Traditional deployments had a static mindset. Applications were packed inside VMs that contained code, configuration, data, and unique state (such as session IDs). In many cases, architectures kept a 1:1 mapping between the front-end component and the backend. A customer logged in through a front-end component (such as a load balancer), and a unique session ID was forwarded to a specific presentation tier node. From there it went to a specific business logic tier node, and sometimes on to a specific backend database node in the DB cluster.

Now consider what happens if the front tier crashes or becomes unresponsive due to high load. What happens if a mid-tier or back-end tier cannot respond to a customer’s call in time? How will such issues affect the customer’s experience, forcing them to refresh the page or log in again?

The cloud offers us a dynamic mindset. Workloads can scale up or down according to load. They may run and offer services for a short time, and be decommissioned when they are no longer required.

It is time to consider immutability. Store session IDs outside the compute nodes (whether VMs, containers, or Function-as-a-Service), so that any node can serve any customer request.
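The sketch below illustrates the idea with two interchangeable web nodes that share an external session store. It assumes an in-memory class stands in for a managed cache or key-value database. `SessionStore` and `WebNode` are hypothetical names. Because no node holds session state, losing one node does not force the customer to log in again.

```python
import secrets
import time


class SessionStore:
    """Stand-in for an external store such as a managed cache or key-value database."""

    def __init__(self, ttl_seconds=1800):
        self._ttl = ttl_seconds
        self._data = {}

    def create(self, user_id):
        session_id = secrets.token_urlsafe(16)
        self._data[session_id] = {"user_id": user_id, "expires": time.time() + self._ttl}
        return session_id

    def get(self, session_id):
        record = self._data.get(session_id)
        if record is None or record["expires"] < time.time():
            self._data.pop(session_id, None)  # drop expired sessions
            return None
        return record["user_id"]


class WebNode:
    """A stateless compute node: it keeps no session data of its own."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def handle(self, session_id):
        user = self.store.get(session_id)
        return f"{self.name} served {user}" if user else f"{self.name}: please log in"


store = SessionStore()
sid = store.create("alice")
print(WebNode("node-a", store).handle(sid))  # node-a served alice
# If node-a disappears, any other node can pick up the same session.
print(WebNode("node-b", store).handle(sid))  # node-b served alice
```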

Still struggling with patch management? It’s time to create immutable images and simply replace an entire component with a newer version, instead of patching and nursing each running compute component like a pet.
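Here is a minimal Python model of “replace, don’t patch.” It assumes a hypothetical `launch` function that starts an instance from an image, plus a health check supplied by the caller. Real deployments would use the provider’s instance groups or a container orchestrator instead. Each instance is tied to one image for its whole life, and the rollout stops if a new instance is unhealthy.

```python
from dataclasses import dataclass
from itertools import count

_next_id = count(1)


@dataclass(frozen=True)
class Instance:
    instance_id: str
    image: str  # fixed at launch; the instance is never patched in place


def launch(image):
    """Hypothetical launcher: starts a brand-new instance from an image."""
    return Instance(instance_id=f"i-{next(_next_id):04d}", image=image)


def rolling_replace(fleet, new_image, is_healthy):
    """Replace instances one by one with fresh ones built from new_image."""
    updated = []
    for old in fleet:
        if old.image == new_image:
            updated.append(old)
            continue
        candidate = launch(new_image)
        if not is_healthy(candidate):
            # Stop the rollout: discard the candidate, keep the remaining old instances.
            return updated + fleet[len(updated):]
        updated.append(candidate)  # in a real system, `old` would be terminated here
    return updated
```

Patching happens once, in the image build. Running instances are only ever swapped, never modified.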

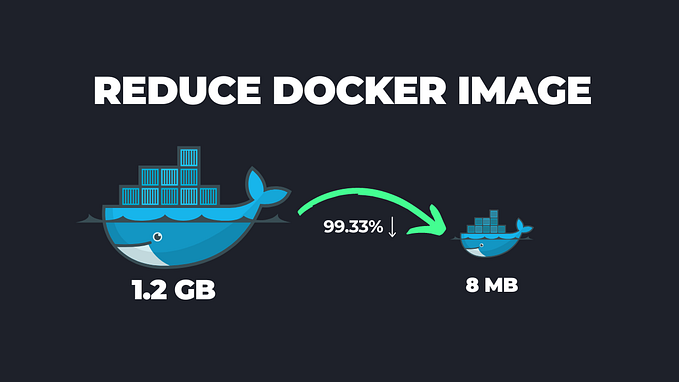

Use CI/CD processes to package compute components (such as VM or container images). Keep artifacts as small as possible (to decrease deployment and load time).

Regularly scan for outdated components (such as binaries and libraries), and update the base images in every development cycle.

Keep all data outside the images, in persistent storage. It is time to embrace object storage, which suits a variety of use cases such as logging, data lakes, and machine learning.

Store environment-specific configuration in environment variables loaded at deployment or load time (from services such as AWS Systems Manager Parameter Store or Azure App Configuration). For values containing sensitive information, use secrets management services (such as AWS Secrets Manager, Azure Key Vault, or Google Secret Manager).
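The Python sketch below keeps non-sensitive settings in environment variables and fetches sensitive values from a secrets manager at load time, so the secret itself never lands in code, images, or config files. The variable names are hypothetical. `aws_secret_provider` uses boto3’s Secrets Manager `get_secret_value` call. `load_config` accepts any provider function, so the same code could be wired to Azure Key Vault or Google Secret Manager instead.

```python
import os
from typing import Callable, Mapping

SecretProvider = Callable[[str], str]


def aws_secret_provider(secret_id: str) -> str:
    """Fetch a secret from AWS Secrets Manager (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the rest of the module works without boto3

    client = boto3.client("secretsmanager")
    return client.get_secret_value(SecretId=secret_id)["SecretString"]


def load_config(get_secret: SecretProvider, env: Mapping[str, str] = os.environ) -> dict:
    """Non-sensitive values come from env vars; sensitive ones only from the secret store."""
    return {
        "log_level": env.get("LOG_LEVEL", "INFO"),
        "queue_url": env["QUEUE_URL"],
        # The env var holds only the secret's *name*; the value is fetched at load time.
        "db_password": get_secret(env["DB_PASSWORD_SECRET_ID"]),
    }
```

In production this would be called as `load_config(aws_secret_provider)`. In tests, a simple dictionary lookup can stand in for the provider.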

Mistake #5 — Old observability mindset

Many organizations that migrated workloads to the public cloud kept their investment in legacy monitoring solutions (mostly built on deployed agents). They still ship logs (application, performance, security, etc.) from the cloud environment back on-prem, without considering the cost of data egress from the cloud or the cost of storing the vast amounts of logs generated by the various cloud services. In many cases, these setups still rely on static log files, and sometimes even on legacy protocols (such as Syslog).

It is time to embrace a modern mindset. It is fairly easy to collect logs from various services in the cloud (in fact, some logs, such as audit logs, are enabled by default and kept for 90 days).

It is time to consider cloud-native services, from SIEM services (such as Microsoft Sentinel or Google Security Operations) to observability services (such as Amazon CloudWatch, Azure Monitor, or Google Cloud Observability). These services can ingest an (almost) unlimited number of events and stream logs and metrics in near real time (instead of relying on log files). They also provide an overview of an entire customer-facing service, made up of various compute, network, storage, and database services.
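Here is a small illustration of streaming events instead of writing log files. The sketch below emits one JSON object per log line to standard output. It assumes the workload runs on a managed compute service that forwards stdout to the provider’s logging service (for example, AWS Lambda to CloudWatch Logs, or Cloud Run to Cloud Logging). The logger name and field names are hypothetical.

```python
import json
import logging
import sys
import time


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON line that a log pipeline can parse and index."""

    def format(self, record):
        event = {
            "timestamp": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra structured fields, passed as logger.info(..., extra={"fields": {...}})
        event.update(getattr(record, "fields", {}))
        return json.dumps(event)


def build_logger(name="orders-service", stream=sys.stdout):
    """Log to stdout only; the platform, not the app, ships the stream onward."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = build_logger()
    log.info("order placed", extra={"fields": {"order_id": "o-123", "latency_ms": 42}})
```

Each call writes a single JSON line, which a logging service can index by fields such as `order_id` without any custom parsing.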

Speaking of security: the dynamic nature of cloud environments does not allow us to keep legacy systems that scan configuration and attack surface at long intervals (such as every 24 hours or every few days). With such intervals, we only find out later that our workload was exposed to unauthorized parties, that we left a configuration in a vulnerable state (still deploying resources exposed to the public Internet?), or that we kept our components outdated.

It is time to embrace automation: continuously scan configuration and authorization, and gain actionable insights on what to fix as soon as possible. Those insights should also show what is vulnerable but not directly exposed to the Internet, and can therefore be handled at a lower priority.
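The prioritization described above can be sketched in a few lines of Python. The `Resource` fields are hypothetical simplifications of what a cloud security posture tool derives from provider APIs. What matters is the ordering: exposed and vulnerable first, exposed next, and vulnerable-but-internal last. In practice, a function like `scan` would run on every configuration change event or on a short schedule, not once a day.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    internet_exposed: bool     # e.g., public endpoint plus a rule open to 0.0.0.0/0
    known_vulnerabilities: int
    outdated: bool


PRIORITY_ORDER = {"critical": 0, "high": 1, "low": 2}


def assess(resource):
    """Return (priority, reason), or None if nothing needs fixing."""
    if resource.internet_exposed and resource.known_vulnerabilities:
        return "critical", "vulnerable and reachable from the Internet"
    if resource.internet_exposed:
        return "high", "exposed to the Internet; confirm this is intended"
    if resource.known_vulnerabilities or resource.outdated:
        return "low", "vulnerable or outdated, but not directly exposed"
    return None


def scan(resources):
    """One pass of a continuous scan: findings sorted so the most urgent come first."""
    findings = []
    for r in resources:
        result = assess(r)
        if result:
            findings.append((result[0], r.name, result[1]))
    return sorted(findings, key=lambda f: (PRIORITY_ORDER[f[0]], f[1]))
```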

Mistake #6 — Failing to embrace cloud-native services

This is often due to a lack of training and knowledge about cloud-native services or capabilities.

Many legacy workloads were built on a 3-tier architecture, since for many years this was the approach most IT teams and developers knew. Architectures were centralized and monolithic, and organizations had to plan for scale and deploy enough compute resources, often in advance, while still failing to predict spikes in traffic or customer requests.

It is time to embrace distributed systems based on event-driven architectures, using managed services (such as Amazon EventBridge, Azure Event Grid, or Google Eventarc). With these services, the cloud provider handles the load (i.e., deploys enough back-end compute capacity), and we can publish and consume events without worrying whether the service will keep up.
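Here is a toy, in-process event bus in Python that shows why producers and consumers stay decoupled. It is only a stand-in for a managed service such as Amazon EventBridge, and it rests on several assumptions. It borrows EventBridge’s “detail-type” and “detail” terminology but has none of its routing rules, retries, or delivery guarantees. `EventBus` and the handlers are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Toy stand-in for a managed event bus: producers publish, the bus routes to consumers."""

    def __init__(self):
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, detail_type: str, handler: Handler) -> None:
        self._subscribers[detail_type].append(handler)

    def publish(self, detail_type: str, detail: dict) -> int:
        """Deliver the event to every matching subscriber; return how many received it."""
        handlers = self._subscribers.get(detail_type, [])
        for handler in handlers:
            handler(dict(detail))  # each consumer gets its own copy of the payload
        return len(handlers)


bus = EventBus()
shipping, billing = [], []
bus.subscribe("OrderPlaced", lambda e: shipping.append(e["order_id"]))
bus.subscribe("OrderPlaced", lambda e: billing.append(e["order_id"]))
print(bus.publish("OrderPlaced", {"order_id": "o-1"}))  # 2
```

Adding a third consumer (say, analytics) takes one more `subscribe` call. The service that publishes `OrderPlaced` does not change.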

We can’t talk about cloud-native services without mentioning functions (such as AWS Lambda, Azure Functions, or Cloud Run functions). Functions have their challenges (vendor-specific implementations, maximum execution time, cold starts, a learning curve, etc.), but they have great potential when designing modern applications. Examples where FaaS is a good fit include real-time data processing (such as IoT sensor data), GenAI text generation (such as chatbot responses or answers to customers in call centers), and video transcoding (such as converting videos to different formats or resolutions), and these are just a few.
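For the IoT example, a function can be as small as the Python sketch below, written against the AWS Lambda Python handler signature `(event, context)`. The event shape (a `readings` list with `sensor_id` and `temperature_c`) and the alert threshold are hypothetical. A real trigger, such as a stream or an IoT rule, would deliver its own event format, and the expected return value also depends on the trigger.

```python
import statistics

ALERT_THRESHOLD_C = 75.0  # hypothetical threshold for this sketch


def lambda_handler(event, context):
    """Summarize a batch of sensor readings; `context` is unused in this sketch."""
    readings = event.get("readings", [])
    if not readings:
        return {"count": 0, "alerts": []}
    temps = [r["temperature_c"] for r in readings]
    alerts = sorted({r["sensor_id"] for r in readings if r["temperature_c"] > ALERT_THRESHOLD_C})
    return {
        "count": len(temps),
        "mean_c": round(statistics.mean(temps), 2),
        "max_c": max(temps),
        "alerts": alerts,
    }


sample = {"readings": [
    {"sensor_id": "s1", "temperature_c": 70.0},
    {"sensor_id": "s2", "temperature_c": 80.5},
    {"sensor_id": "s1", "temperature_c": 71.5},
]}
print(lambda_handler(sample, None))
```

The function holds no state between invocations, so the platform can run as many copies in parallel as the incoming data requires.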

Functions fit well in a microservice architecture. For example, one microservice can stream logs to a managed Kafka service, some microservices can trigger functions that run queries against the backend database, and others can persist data in a fully managed, serverless database (such as Amazon DynamoDB, Azure Cosmos DB, or Google Spanner).

Mistake #7 — Using old identity and access management practices

No doubt we need to authenticate and authorize every request and follow the principle of least privilege, but how many times have we seen bad practices such as storing credentials in code or configuration files? (“It’s just in the Dev environment; we will fix it before moving to Prod…”)

How many times have we seen developers make changes directly in production environments?

In the cloud, IAM is tightly integrated into all services, and some cloud providers allow you to configure fine-grained permissions down to specific resources. For example, AWS IAM can allow only members of specific groups who authenticated with MFA to access a specific S3 bucket.
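The S3 example can be expressed as an identity-based IAM policy, built here in Python. The bucket name is hypothetical. The “only members of specific groups” part comes from attaching the policy to an IAM group (for example, with `aws iam put-group-policy`). The `aws:MultiFactorAuthPresent` condition key limits the grant to MFA-authenticated requests.

```python
import json

BUCKET = "example-finance-reports"  # hypothetical bucket name


def mfa_bucket_policy(bucket: str) -> dict:
    """Identity-based policy for an IAM group: access to one bucket, only with MFA."""
    mfa_required = {"Bool": {"aws:MultiFactorAuthPresent": "true"}}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOneBucketWithMfa",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",      # the bucket itself
                "Condition": mfa_required,
            },
            {
                "Sid": "ReadWriteObjectsWithMfa",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",    # objects inside the bucket
                "Condition": mfa_required,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(mfa_bucket_policy(BUCKET), indent=2))
```

The policy grants nothing outside this one bucket, and it grants nothing at all to requests signed with long-term keys that carry no MFA context.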

It is time to switch from static credentials to temporary credentials or roles. When an identity requires access to a resource, it must authenticate, and its required permissions are reviewed before temporary (short-lived, time-based) access is granted.

It is time to embrace a zero-trust mindset as part of architecture decisions. Assume identities can come from any place, at any time, and we cannot automatically trust them. Every request needs to be evaluated, authorized, and eventually audited for incident response/troubleshooting purposes.
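Here is a toy Python model of “evaluate every request” using short-lived credentials. It is not a replacement for a cloud provider’s token service (such as AWS STS) or an identity provider. The HMAC key, the token format, and the scope names are all hypothetical. Every call to `authorize` checks the signature, the expiry, and the requested scope, then appends the decision to an audit log, whether it allows or denies the request.

```python
import hashlib
import hmac
import json
import time

SIGNING_KEY = b"demo-only-key"  # hypothetical; a real system would use a managed key service
AUDIT_LOG = []


def _sign(payload: str) -> str:
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(subject, scopes, ttl_seconds=900, now=None):
    """Issue a short-lived, signed token (15 minutes by default)."""
    now = time.time() if now is None else now
    claims = {"sub": subject, "scopes": sorted(scopes), "exp": now + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True)
    return payload, _sign(payload)


def authorize(token, required_scope, now=None):
    """Evaluate every request on its own: signature, expiry, scope; then audit the decision."""
    now = time.time() if now is None else now
    payload, sig = token
    if not hmac.compare_digest(sig, _sign(payload)):
        claims, decision = {}, "deny: bad signature"
    else:
        claims = json.loads(payload)
        if now >= claims["exp"]:
            decision = "deny: expired"
        elif required_scope not in claims["scopes"]:
            decision = "deny: missing scope"
        else:
            decision = "allow"
    AUDIT_LOG.append({"sub": claims.get("sub"), "scope": required_scope,
                      "decision": decision, "at": now})
    return decision == "allow"
```

A token issued with `ttl_seconds=900` stops working 15 minutes later without anyone having to revoke it. That property is what makes temporary credentials safer than static keys.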

When a request to access a production environment is raised, we need to embrace break-glass processes. These processes make sure we grant only the required permissions (usually to members of the SRE or DevOps team), and that those permissions are automatically revoked when the access window ends.
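The break-glass flow can be sketched as a small Python broker. Everything here is hypothetical, including the team names, the 60-minute cap, and the rule that a second person must approve. Eligibility is limited to SRE and DevOps members, and expired grants are dropped on every check, so revocation does not depend on someone remembering to do it.

```python
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class BreakGlassGrant:
    user: str
    role: str
    reason: str
    approved_by: str
    expires_at: float


@dataclass
class AccessBroker:
    """Hypothetical broker that grants emergency production access for a bounded window."""
    team_of: dict                                   # user -> team
    eligible_teams: frozenset = frozenset({"sre", "devops"})
    max_minutes: int = 60
    grants: list = field(default_factory=list)

    def request(self, user, role, reason, approved_by, minutes, now=None):
        now = time.time() if now is None else now
        if self.team_of.get(user) not in self.eligible_teams:
            raise PermissionError(f"{user} is not eligible for break-glass access")
        if approved_by == user:
            raise PermissionError("a second person must approve break-glass access")
        minutes = min(minutes, self.max_minutes)     # never longer than the cap
        grant = BreakGlassGrant(user, role, reason, approved_by, now + minutes * 60)
        self.grants.append(grant)
        return grant

    def active_roles(self, user, now=None):
        """Drop expired grants on every check, then return the user's remaining roles."""
        now = time.time() if now is None else now
        self.grants = [g for g in self.grants if g.expires_at > now]
        return {g.role for g in self.grants if g.user == user}
```

Every grant carries a reason and an approver, which gives the audit trail the incident review will need.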

Mistake #8 — Rushing into the cloud with traditional data center knowledge

We should never ignore our team’s knowledge and experience.

Rushing to adopt cloud services while relying on old data center knowledge is prone to failure: it will cost the organization a lot of money and will most likely be inefficient in terms of resource usage.

Instead, we should embrace the change, learn how cloud services work, gain hands-on practice (by deploying test labs and playing with the different services in different architectures), and not be afraid to fail and quickly recover.

To succeed in working with cloud services, you should be a generalist. The old mindset of specializing in a single area (such as networking, operating systems, storage, or databases) is not sufficient. You need to practice and gain broad knowledge of how the different services work, how they communicate with each other, what their limitations are, and, don’t forget, what pricing options they offer when you select a service for a large-scale production system.

Do not assume traditional data center architectures will be sufficient to handle the load of millions of concurrent customers. The cloud allows you to create modern architectures, and in many cases, there are multiple alternatives for achieving business goals.

Keep learning and searching for better or more efficient ways to design your workload architectures (who knows, maybe in a year or two there will be new services or new capabilities to achieve better results).

Summary

There is no doubt that the public cloud allows us to build and deploy applications for the benefit of our customers while breaking free from the limitations of the on-prem data center (in terms of automation, scale, virtually unlimited resources, and more).

Embrace the change by learning how to use the various services in the cloud, adopt new architecture patterns (such as event-driven architectures and APIs), prefer managed services (to allow you to focus on developing new capabilities for your customers), and do not be afraid to fail — this is the only way you will gain knowledge and experience using the public cloud.

About the author

Eyal Estrin is a cloud and information security architect, an AWS Community Builder, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.
